Program Dates: May 27, 2009 – August 1, 2009

SPIRE-EIT is a 10-week Research Experiences for Undergraduates (REU) program that combines classroom training with hands-on research projects.

Funded by NSF Grant IIS-0851976.

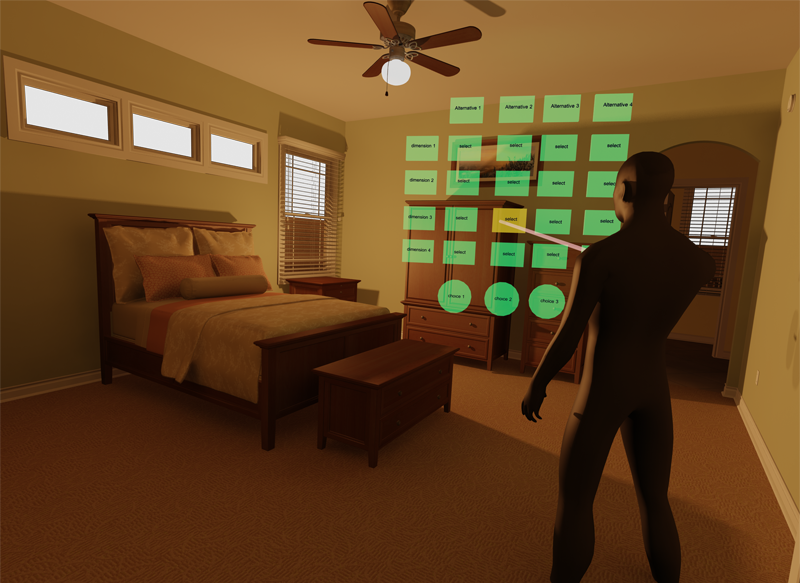

The Effect of Interface Domain on Decision Making Experience

Abstract: Decision processes are difficult to capture in the natural environment. Nearly all analyses of high-risk decision making are based on retrospective interviews, which are subject to bias, storytelling effects, and memory loss. To address this problem, we created a virtual environment that simulates high-risk scenarios, where an emergency responder’s decision-making processes can be safely monitored in real time while the responder interacts with a naturalistic setting.

We are particularly interested in firefighters’ decision-making processes and have built a realistic environment that simulates various emergency fire situations for this purpose. This fire simulation environment was created to run in ISU’s C6 CAVE to take advantage of the high processing power and visual fidelity that the C6 provides. However, another option for emulating high-risk scenarios is augmented reality, where a virtual stimulus is overlaid on the physical environment. Instead of modeling a building and simulating a fire in it in a VR CAVE, it might be possible to use augmented reality to overlay fire and our virtual decision-capturing tool on actual buildings.

We are interested in translating aspects of our decision-process capturing software to an augmented reality system and evaluating its effectiveness, advantages, and future applications in the study of decision processes.

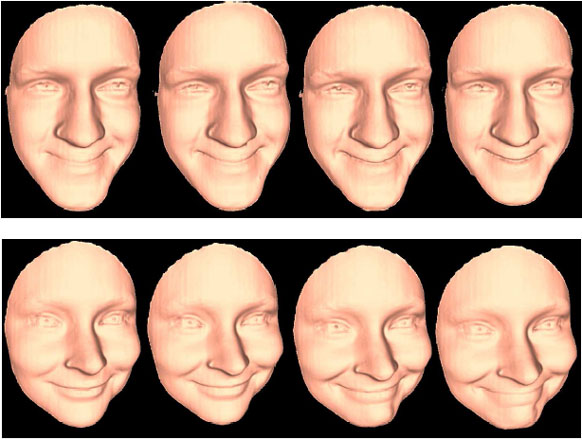

Markerless Motion Tracking

Abstract: Current motion tracking typically involves multiple cameras, markers, or other sensors. This project focuses on markerless motion tracking that uses a single camera, mathematical prediction models, and computer vision techniques to track features such as the eyes, mouth, and hands in real time with high precision. This challenge is particularly important for facial features because the brain’s face detection systems are so finely tuned. When implemented successfully, a system like this can be used by a human to control an avatar much like a real-world, highly realistic puppet.

Marker-based tracking is commonly used in films and video games, where the technology is known as “Motion Capture”, or “Mo-Cap”. Physical markers are placed on a real human body or face, multiple cameras capture the markers and their movement, and a computer generates a 3D model that has the same motion at the marker locations. This means that the motion of the geometry is known only at the marker points, while the rest has to be animated by the computer (or the modeler). Although the process is laborious, it was still used until recently in films such as The Polar Express and Beowulf. Mova recently developed a less laborious motion capture technology that sprays random “speckle” patterns onto the face (or body). It is a much better technology that captures more detailed motion, and the recent film The Curious Case of Benjamin Button used it. However, it is still a marker-based technology.

Goal: The research objective is to develop a tracking system that does not use markers. A single camera captures the motion, and mathematical prediction models and computer vision techniques are used to track the motion and generate photo-realistic animated 3D models. This technique is especially useful when combined with my 3D motion capture system (see my lab), which captures 2D images and 3D geometry at the same time. The frames below show typical human facial expressions captured by my system.

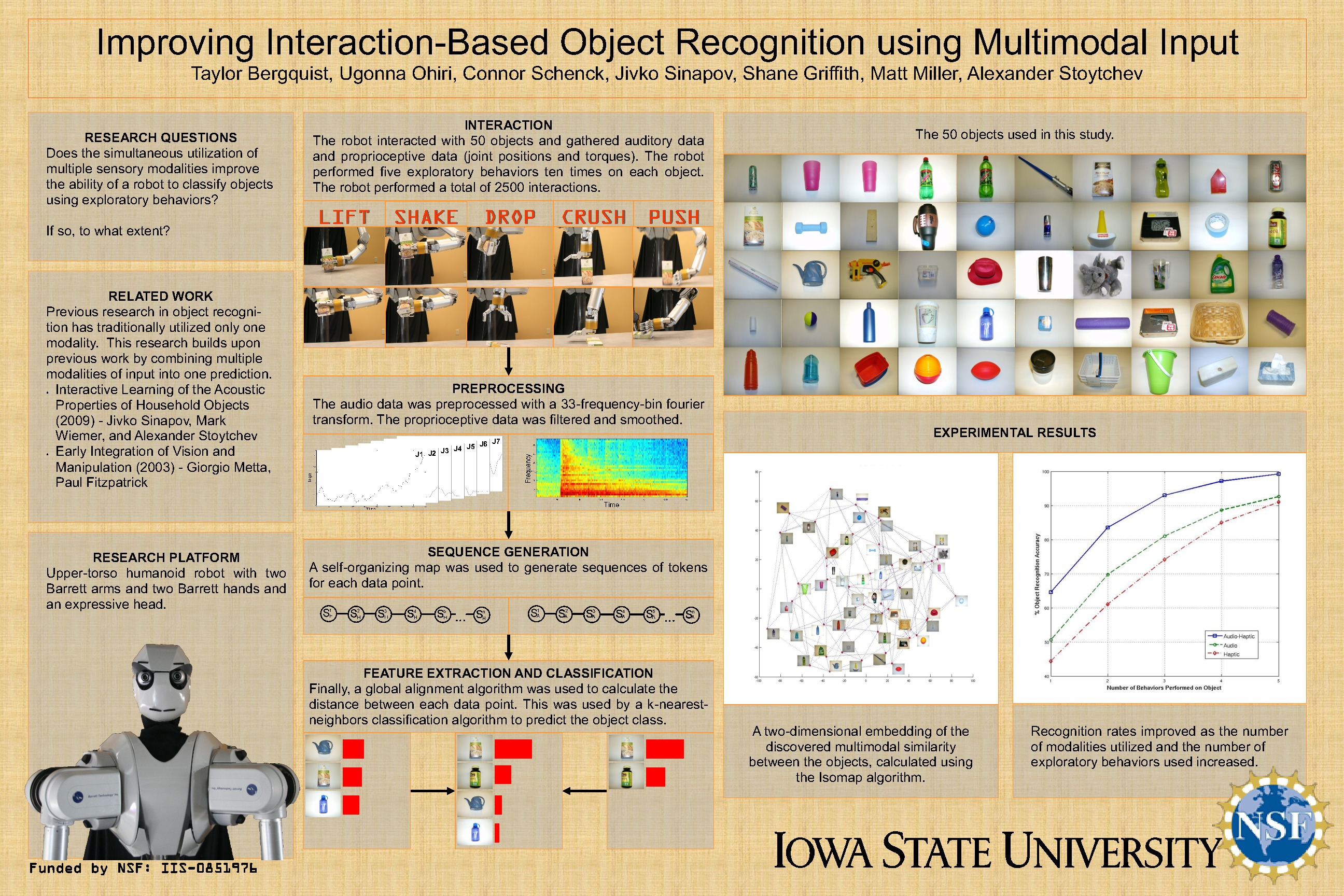

Developmental Robotics

Abstract: The REU students in this group will be expected to formulate a research project on their own with some help from the faculty and graduate students at the Developmental Robotics Laboratory. Furthermore, the REU students will be expected to perform a large-scale experimental study with a state-of-the-art upper-torso humanoid robot and summarize their results in a research paper that will be submitted to one of the top conferences in robotics. The research topic is flexible as long as it is aligned with the mission of the lab.

Developmental Robotics Lab: http://www.ece.iastate.edu/~alexs/lab/

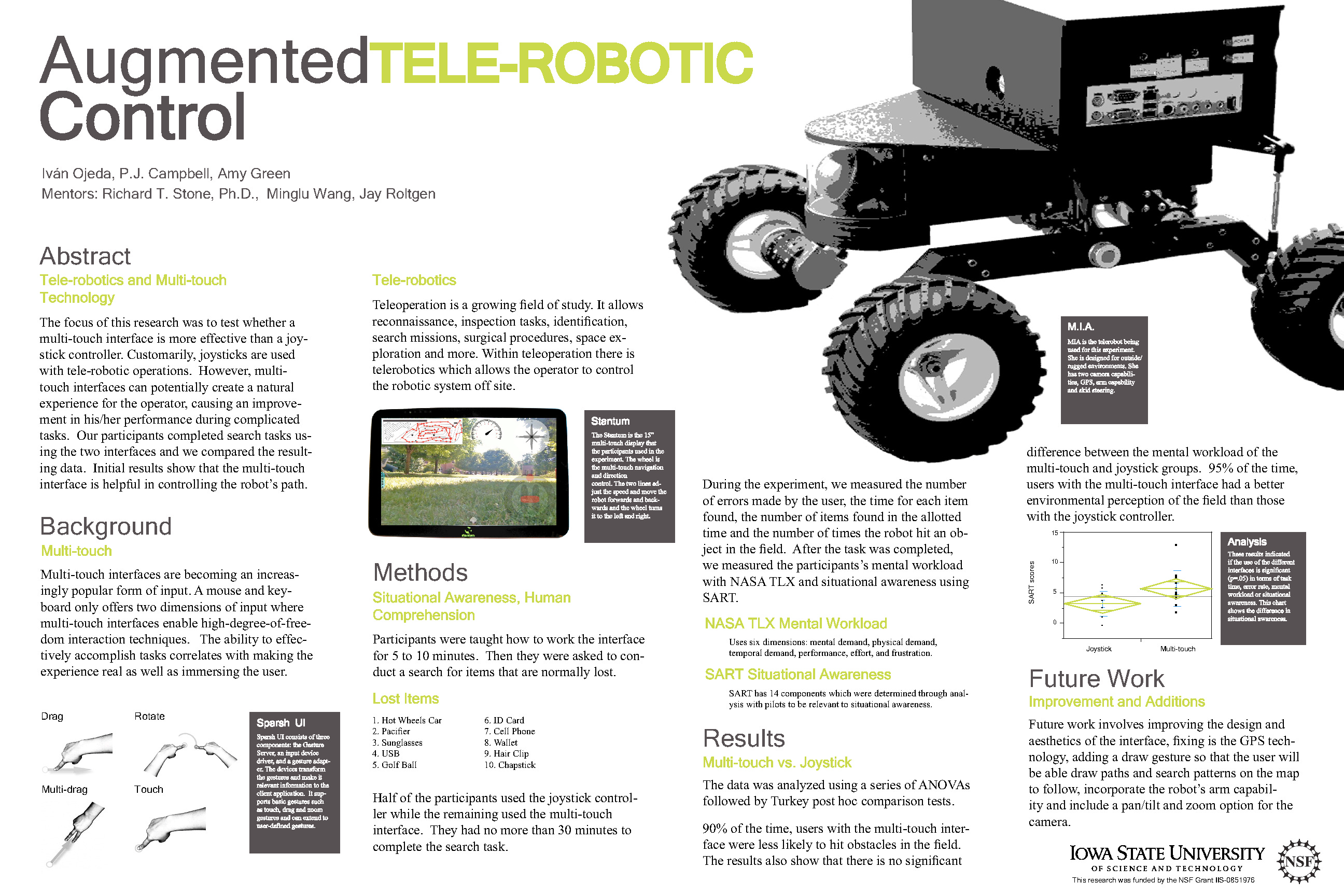

Augmented Tele-robotic Control

Abstract: High-fidelity telepresence is considered a key factor in the development of highly effective telerobotic systems. The focus of this work is the integration of augmented reality (and/or multi-touch technology) and visualization tools to create a control system that enhances a human’s ability to perform the complex telerobotic operation of target acquisition and collection. Students assigned to this project will work with an actual long-range, high-performance telerobotic reconnaissance robot; they will be tasked with designing and implementing a multi-touch interface system that enhances human comprehension and sense of presence. The group will learn some of the physiological and cognitive factors that are considered when designing for telepresence and how to implement these designs as testable prototypes. Following implementation, the students will be exposed to experimental design concepts and participate in actual human subject testing of the group’s design. During testing, the students will learn how to perform basic measures of human performance. Following testing, the data will be analyzed, and the results will form the basis of our conference paper.

Telerobotics Testing Photos

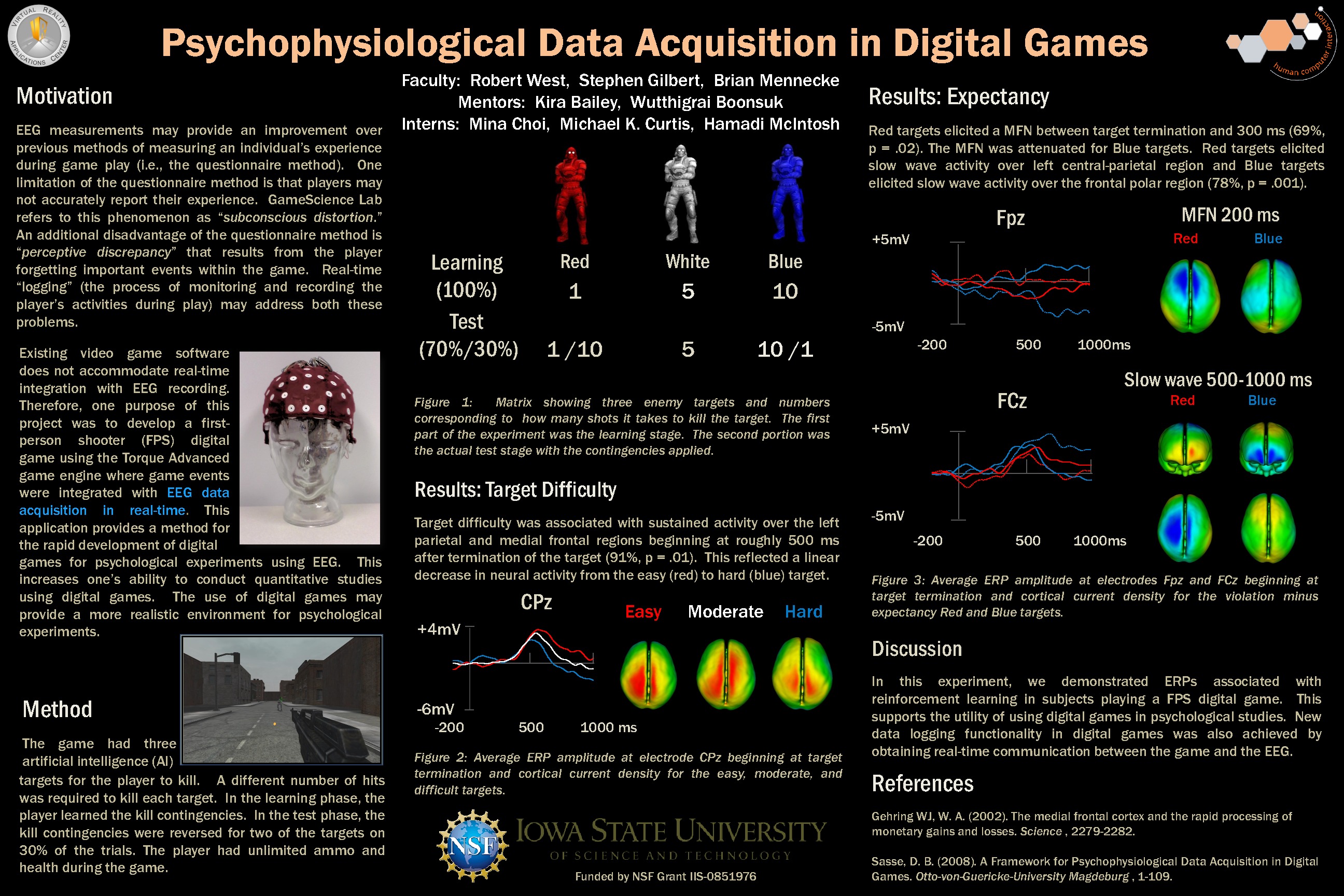

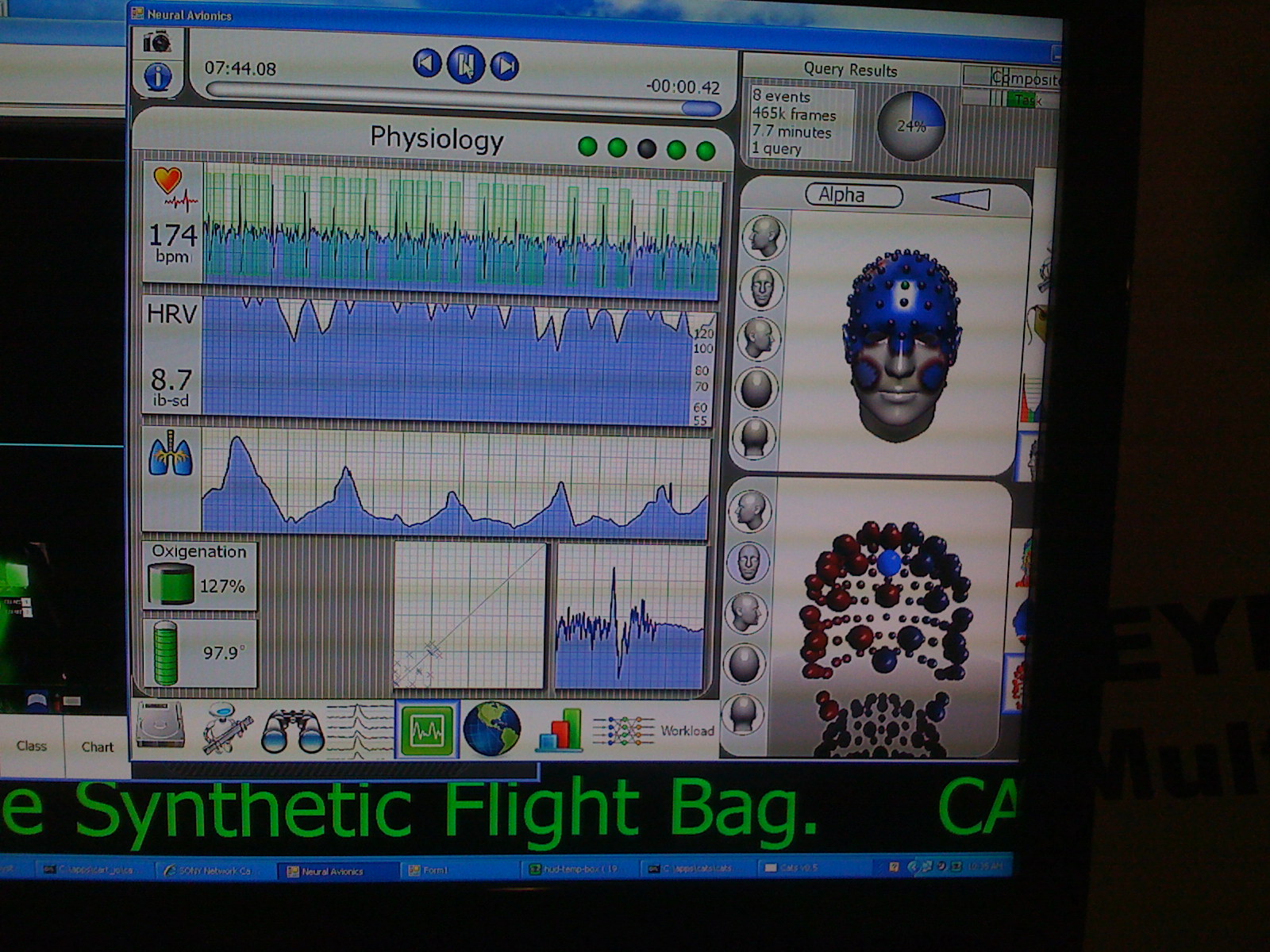

Psychophysiological Data Acquisition in Digital Games

Abstract: The project is developing a data-logging architecture so that analog psychophysics devices such as EEG, galvanic skin response systems, and eye-trackers can be time-synced with a computer running a video game. Usually the psychophysics system samples at a higher frequency than the computer, and it’s analog instead of digital, so it’s difficult to match up events like brainwaves in an EEG to events in the game like shooting an enemy. Once completed, this system will enhance research on brain-machine interfaces and the effects of game environments on behavior, attitudes, and learning.

Goal: Integrate a video game with EEG in real time.
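As a rough illustration of the matching problem described in this abstract, the sketch below pairs each game event with the closest sample from a faster-sampled signal. It is not the project's architecture: the 256 Hz rate, the event names, and the function names are all hypothetical, and it assumes both streams are already timestamped in seconds on a shared clock, which is the hard part in practice.

```python
from bisect import bisect_left

def nearest_sample_index(sample_times, event_time):
    """Return the index of the sample whose timestamp is closest to event_time.

    sample_times must be sorted in ascending order (seconds on a shared clock).
    """
    i = bisect_left(sample_times, event_time)
    if i == 0:
        return 0
    if i == len(sample_times):
        return len(sample_times) - 1
    before, after = sample_times[i - 1], sample_times[i]
    # Pick whichever neighbouring sample is closer in time to the event.
    return i if (after - event_time) < (event_time - before) else i - 1

def align_events(sample_times, events):
    """Map each (label, time) game event to the index of its nearest sample."""
    return [(label, nearest_sample_index(sample_times, t)) for label, t in events]

# Hypothetical data: two seconds of a signal sampled at an assumed 256 Hz.
SAMPLE_RATE_HZ = 256
sample_times = [n / SAMPLE_RATE_HZ for n in range(2 * SAMPLE_RATE_HZ)]

# Hypothetical game events logged at a much lower, irregular rate.
events = [("shoot_enemy", 0.5), ("take_damage", 1.003)]

aligned = align_events(sample_times, events)
for label, index in aligned:
    print(f"{label}: sample {index} at t={sample_times[index]:.4f}s")
```

A binary search keeps each lookup fast even for long recordings, since the sample timestamps are already in order.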

